The Development Setup

At the time of writing this series, none of the Rust integration has made it into mainline yet, so to obtain a Rust-enabled kernel one needs either the most recent patchset from the kernel mailing list or the tree from the Rust for Linux repo:

$ git remote add rust https://github.com/Rust-for-Linux/linux.git

The code is still experimental, so it would probably be unwise to unleash it on a live system. I picked an old VM I had lying around for debugging kernel issues; I found Arch particularly suitable for this task as it has hooks for adding unpackaged kernels and initrds, and stays out of your way when modifying the system.

What’s interesting about the Rust for Linux repository is that the maintainers use GitHub for tracking issues and will actually consider pull requests submitted there. The project of course has a mailing list, as is tradition, and new iterations of the patchset will be posted on the LKML.

After launching menuconfig on the rust branch, chances are none of the Rust related options are shown. This is because they depend on CONFIG_RUST_IS_AVAILABLE=y, which is determined at config time. The rustavailable target can be used to check whether Kconfig considers Rust “available”:

$ make LLVM=1 rustavailable
***
*** Rust bindings generator 'bindgen' is too new. This may or may not work.
*** Your version: 0.60.1
*** Expected version: 0.56.0
***
Rust is available!

A slight toolchain mismatch, but it works out in practice. Besides bindgen, a number of other tools need to be present that aren’t usually required for building the kernel: llvm, clang, the Rust compiler and its standard library sources. Not just any version will do; it needs to be a fairly recent toolchain, Rust 1.62 in this case. A stable toolchain, that is, which no longer requires any out-of-tree patches as all the necessary changes seem to have been merged into Rust at this point. It does enable a number of unstable features though.
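
The version warning printed by rustavailable comes down to comparing two dotted version numbers. The following is a minimal sketch of such a comparison in plain Rust; the real check lives in a script in the kernel tree, and the helper names `parse_version` and `describe` are made up for illustration.

```rust
use std::cmp::Ordering;

/// Parse a "major.minor.patch" string into a tuple that orders the same way
/// the version does. Returns `None` for anything that is not exactly three
/// non-negative integers.
fn parse_version(text: &str) -> Option<(u32, u32, u32)> {
    let mut parts = text.trim().split('.').map(|part| part.parse::<u32>().ok());
    let version = (parts.next()??, parts.next()??, parts.next()??);
    // Reject trailing components such as "1.2.3.4".
    if parts.next().is_some() {
        return None;
    }
    Some(version)
}

/// Hypothetical helper: describe how an installed tool relates to the
/// version the tree expects.
fn describe(found: &str, expected: &str) -> Option<&'static str> {
    let found = parse_version(found)?;
    let expected = parse_version(expected)?;
    Some(match found.cmp(&expected) {
        Ordering::Less => "too old",
        Ordering::Equal => "matches",
        Ordering::Greater => "too new",
    })
}

fn main() {
    // Versions taken from the rustavailable output quoted above.
    match describe("0.60.1", "0.56.0") {
        Some(verdict) => println!("bindgen is {verdict}"),
        None => println!("could not parse version"),
    }
}
```

Tuples compare field by field, so no hand-written ordering logic is needed once the string is parsed.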

The quick-start guide has detailed instructions on how to obtain the right versions of these tools; in most cases it will boil down to feeding the output of a script into rustup.

Passing LLVM=1 is mandatory; fingers crossed the LLVM dependency will be replaced by GCC-rs at some point in the future.

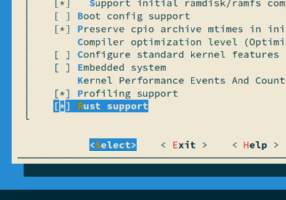

With all the prerequisites in place, the Rust related menu entries appear. Be sure to enable the examples under “Kernel hacking → Sample kernel code → Rust samples” as well. With CONFIG_RUST=y set, the kernel can now be built with the usual make && make modules && make bzImage sequence. No additional commands are required, which confirms the impression that Rust support is not just bolted on but first-class.

The resulting additions to the config look something like this:

$ zgrep _RUST /proc/config.gz
CONFIG_RUST_IS_AVAILABLE=y
CONFIG_RUST=y
CONFIG_RUSTC_VERSION_TEXT="rustc 1.62.0 (a8314ef7d 2022-06-27)"
CONFIG_HAVE_RUST=y
CONFIG_SAMPLES_RUST=y
CONFIG_SAMPLE_RUST_MINIMAL=m
CONFIG_SAMPLE_RUST_PRINT=m
CONFIG_SAMPLE_RUST_MODULE_PARAMETERS=m
CONFIG_SAMPLE_RUST_SYNC=m
# […] skip some samples
# CONFIG_RUST_DEBUG_ASSERTIONS is not set
CONFIG_RUST_OVERFLOW_CHECKS=y
# CONFIG_RUST_BUILD_ASSERT_ALLOW is not set
# CONFIG_RUST_BUILD_ASSERT_WARN is not set
CONFIG_RUST_BUILD_ASSERT_DENY=y
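
To give an idea of what an option like CONFIG_RUST_OVERFLOW_CHECKS is about, here is a small userspace sketch. It assumes the option corresponds to rustc's overflow-checks code generation setting, which I have not verified against the build rules. The function `total_len` is a made-up example; the point is that the explicit `checked_*` and `wrapping_*` methods behave the same regardless of how the build is configured.

```rust
/// Add two lengths without relying on the build's overflow-check setting.
/// `checked_add` returns `None` on overflow in every build configuration.
fn total_len(header: u32, payload: u32) -> Option<u32> {
    header.checked_add(payload)
}

fn main() {
    // Fits comfortably.
    println!("{:?}", total_len(16, 4096));

    // Written as a plain `header + payload`, this would panic with overflow
    // checks enabled and silently wrap around without them.
    println!("{:?}", total_len(u32::MAX, 1));

    // Wrapping is still available when it is the intended behaviour.
    println!("{}", u32::MAX.wrapping_add(1));
}
```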

During the build various Rust related tools will then show up as “quiet commands”:

DESCEND objtool
CALL scripts/atomic/check-atomics.sh
CALL scripts/checksyscalls.sh
RUSTC L rust/bindings.o
EXPORTS rust/exports_bindings_generated.h
RUSTC L rust/kernel.o
EXPORTS rust/exports_kernel_generated.h
CHK include/generated/compile.h

The Kernel-Side Rust Environment

Enabling Rust results in a large number of symbols getting added, some unmangled and readable but many more mangled ones:

0x00000000 _RNvXs_NtCs3yuwAp0waWO_4core5asciiNtB4_13EscapeDefaultNtNtNtNtB6_4iter6traits12double_ended19DoubleEndedIterator9next_back vmlinux EXPORT_SYMBOL_GPL

Now wait, this doesn’t resemble traditional Rust mangling at all! Where are my dollar signs‽ Rather, this looks a lot like the still somewhat experimental v0 mangling style. And sure enough, a comment in rust/exports.c confirms this. Mangled symbols are quite unwieldy compared to the flat C style symbols; the longest name in my kernel’s vmlinux.symvers is 163 characters long, which exceeds the cap on symbol length in current mainline kernels (128). Part of the Rust support is a set of patches that lift this restriction and raise the limit to 512 characters.
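
The difference between the mangling styles is easy to spot mechanically: v0 symbols start with `_R`, whereas legacy Rust symbols borrow the `_ZN` prefix from the Itanium C++ ABI. The sketch below classifies names by prefix and checks them against the two length limits mentioned above. It is a rough heuristic only: a prefix check cannot tell legacy Rust from C++ symbols, and whether the kernel's limit includes the terminating NUL byte is glossed over here. All names in the code are made up for illustration.

```rust
/// Symbol length cap in mainline, as quoted in the text.
const OLD_LIMIT: usize = 128;
/// Raised cap from the Rust support patches, as quoted in the text.
const NEW_LIMIT: usize = 512;

/// Mangling schemes this sketch can tell apart by prefix alone.
#[derive(Debug, PartialEq)]
enum Mangling {
    /// Rust v0 symbols start with `_R`.
    V0,
    /// Legacy Rust symbols reuse the Itanium C++ `_ZN` prefix.
    Legacy,
    /// Anything else, for instance a flat C symbol.
    Unmangled,
}

fn classify(symbol: &str) -> Mangling {
    if symbol.starts_with("_R") {
        Mangling::V0
    } else if symbol.starts_with("_ZN") {
        Mangling::Legacy
    } else {
        Mangling::Unmangled
    }
}

/// Simplified length check: counts bytes of the name only.
fn fits(symbol: &str, limit: usize) -> bool {
    symbol.len() <= limit
}

fn main() {
    // The symbol quoted from vmlinux.symvers above.
    let quoted = "_RNvXs_NtCs3yuwAp0waWO_4core5asciiNtB4_13EscapeDefaultNtNtNtNtB6_4iter6traits12double_ended19DoubleEndedIterator9next_back";
    println!("{:?}", classify(quoted));

    // A synthetic stand-in for a 163 character name.
    let long = format!("_R{}", "a".repeat(161));
    println!(
        "fits old limit: {}, fits new limit: {}",
        fits(&long, OLD_LIMIT),
        fits(&long, NEW_LIMIT)
    );
}
```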

Ultra long symbol names notwithstanding, the impact of Rust on kernel image size is moderate. My stripped down version of the Arch Linux .config results in a zlib compressed image of 7'953'536 bytes with a regular v5.19 kernel, and 8'131'232 bytes with CONFIG_RUST=y; CC=clang was used in both cases.
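
To put the two image sizes into proportion, the difference can be worked out in a few lines. This is plain arithmetic on the numbers quoted above; the function name `growth` is made up.

```rust
/// Absolute and relative growth between two image sizes in bytes.
fn growth(before: u64, after: u64) -> (i64, f64) {
    // Signed, so a shrinking image would show up as a negative delta.
    let delta = after as i64 - before as i64;
    let percent = delta as f64 / before as f64 * 100.0;
    (delta, percent)
}

fn main() {
    // Sizes of the compressed images without and with CONFIG_RUST=y.
    let (delta, percent) = growth(7_953_536, 8_131_232);
    println!("{delta} bytes, {percent:.2} %");
}
```

That works out to 177'696 additional bytes, a little over two percent.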

The makefile sets some rather exotic flags on the core and alloc crates:

core-cfgs = \
    --cfg no_fp_fmt_parse

alloc-cfgs = \
    --cfg no_global_oom_handling \
    --cfg no_rc \
    --cfg no_sync

no_fp_fmt_parse is self-explanatory in kernel context where we don’t have floating point arithmetic at our disposal, so it doesn’t come as a surprise that the flag was added to Rust explicitly for that purpose. The same goes for no_global_oom_handling, which seems to have originated in a comment of Linus’ on an earlier stage of the Rust patchset. It disables a large number of “pseudo-infallible” APIs such as Box::new(), which simply panic!() on OOM. Part two of this series will discuss in more detail what effects this has on how to write Rust code to run in kernel space.
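
As a small taste of what fallible allocation looks like, here is a userspace sketch using the standard library's `Vec::try_reserve_exact`, which reports failure through a `Result` instead of panicking or aborting. This is ordinary std Rust, not kernel code, and the function `reserve_buffer` is made up for illustration; the APIs actually available inside the kernel may differ.

```rust
use std::collections::TryReserveError;

/// Allocate room for `len` bytes, handing allocation failure back to the
/// caller instead of panicking.
fn reserve_buffer(len: usize) -> Result<Vec<u8>, TryReserveError> {
    // `Vec::new` does not allocate, so it cannot fail.
    let mut buf = Vec::new();
    // This is the only place where memory is requested; an error is
    // propagated with `?` rather than bringing everything down.
    buf.try_reserve_exact(len)?;
    Ok(buf)
}

fn main() {
    match reserve_buffer(4096) {
        Ok(buf) => println!("reserved {} bytes", buf.capacity()),
        Err(err) => println!("allocation failed: {err}"),
    }

    // A request that cannot possibly be satisfied yields an error value.
    match reserve_buffer(usize::MAX) {
        Ok(_) => println!("unexpectedly succeeded"),
        Err(err) => println!("allocation failed: {err}"),
    }
}
```

The caller is forced to decide what to do about a failed allocation, which is the behaviour kernel code needs.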

Finally, the alloc::sync and alloc::rc modules are filtered out because the kernel provides its own implementations for refcounting and synchronization primitives.

As for the tooling, both rustfmt and clippy are made available through build rules, but they don’t seem to be integrated in checkpatch.pl yet. (It does perform some basic checks on Rust files though, for example the validation of SPDX headers.)